Test render from a generative art project in development, testing a further iteration of the palette from the previous post.

Mitosis 0.18.6 Rusty Vintage Vehicle Palette (v2) Test

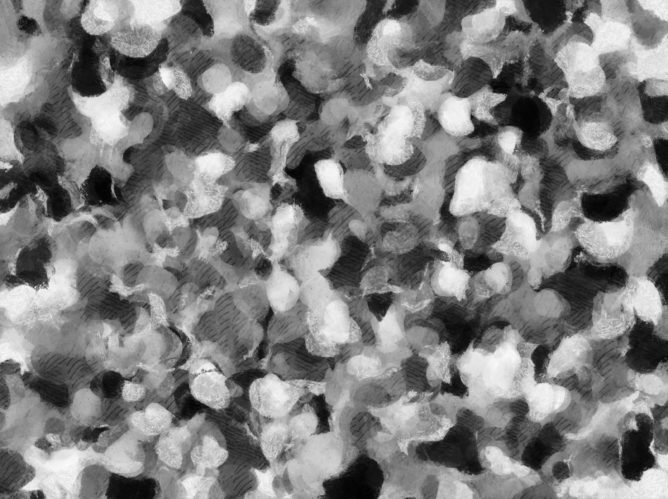

Image bomber coded in Processing, with random sizing and slight squishing of circles in 25 shades of gray. Worked into painterly layers in Dynamic Auto-Painter, then blended in Photoshop with layer and color effects.
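The image bomber's code isn't shown here. Below is a minimal Python sketch of the general idea, assuming an "image bomber" scatters many copies of a shape at random positions with random size, a slight squish, and random rotation. The function names, size and squish ranges, and the evenly spaced gray ramp are hypothetical choices for illustration, not the Processing script's actual values.

```python
import random


def bomb_circles(count, width, height, shades=25, seed=None):
    """Toy image bomber: scatter circles with random size, slight squish, and rotation.

    Hypothetical sketch; the size range, squish range, and linear gray ramp are assumptions.
    """
    rng = random.Random(seed)
    # evenly spaced gray levels from black (0) to white (255); an assumption
    grays = [round(i * 255 / (shades - 1)) for i in range(shades)]
    shapes = []
    for _ in range(count):
        radius = rng.uniform(10, 80)        # random size
        squish = rng.uniform(0.85, 1.0)     # slight squish along one axis
        shapes.append({
            "x": rng.uniform(0, width),
            "y": rng.uniform(0, height),
            "rx": radius,
            "ry": radius * squish,
            "angle": rng.uniform(0, 360),   # rotation in degrees
            "gray": rng.choice(grays),
        })
    return shapes


def to_svg(shapes, width, height):
    """Render the scattered shapes as rotated SVG ellipses."""
    parts = [f'<svg xmlns="http://www.w3.org/2000/svg" width="{width}" height="{height}">']
    for s in shapes:
        g = s["gray"]
        parts.append(
            f'<ellipse cx="{s["x"]:.1f}" cy="{s["y"]:.1f}" '
            f'rx="{s["rx"]:.1f}" ry="{s["ry"]:.1f}" '
            f'transform="rotate({s["angle"]:.1f} {s["x"]:.1f} {s["y"]:.1f})" '
            f'fill="rgb({g},{g},{g})"/>'
        )
    parts.append("</svg>")
    return "\n".join(parts)
```

For example, `to_svg(bomb_circles(200, 1920, 1080, seed=42), 1920, 1080)` returns an SVG string that could be saved and fed to a painterly filter; passing the same seed reproduces the same layout.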

Also in this post (and not in syndicated posts): the whole image (not just the crop).

Thumbnails link to larger images, free for personal use.

For an explanation of the process that made this, see:

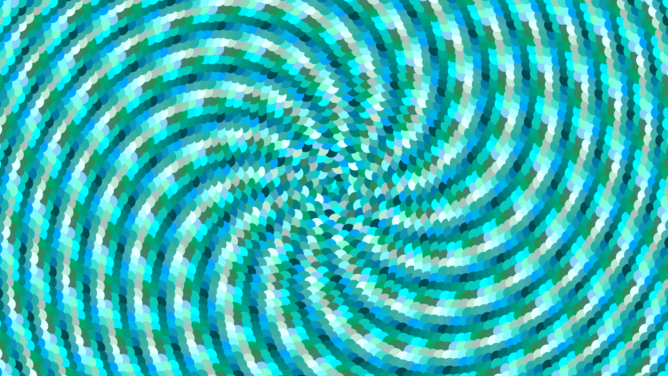

This is from parameter set 2 of a Vogel spiral dots Processing language script. The next post may be an animation of this or a link to an animation. Those parameters are:

```processing
int backgroundDotSize = 40;
//int foregroundDotSize = 15;
int vogelPointsDistance = 13;
color[] backgroundDotRNDcolors = {
  #01EDFD, #00FFFF, #00CCCC, #0CCAB3, #00A693, #009B7D,
  #008B8B, #008080, #006D6F, #004C54, #007BA7, #0D98BA,
  #00B7EB, #00A6FE, #3AA8C1, #43B3AE, #3AB09E, #20B2AA,
  #40E0D0, #7DF9FF, #B2FFFF, #E0FFFF, #C0E8D5, #A8C3BC,
  #88D8C0, #7FFFD4, #87D3F8, #40826D, #2E8B57, #00A86B
};
```

The canvas (screen) size is also set to HD video in the setup() function with this call:

```processing
size(1920,1080);
```
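For readers unfamiliar with the layout: a Vogel spiral (Vogel's model of sunflower seed packing) places point n at radius c·√n and at an angle of n times the golden angle (about 137.5°). The Python sketch below is a minimal illustration that assumes `vogelPointsDistance` plays the role of the spacing constant c and that the spiral is centered on the 1920 x 1080 canvas. It is not the Processing script's code, and it leaves out the dot colors and the wiggling.

```python
import math

# golden angle in radians: pi * (3 - sqrt(5)), about 137.508 degrees
GOLDEN_ANGLE = math.pi * (3 - math.sqrt(5))


def vogel_points(count, spacing=13, width=1920, height=1080):
    """Return (x, y) centers for `count` dots in a Vogel spiral centered on the canvas.

    `spacing` is assumed to correspond to vogelPointsDistance.
    """
    cx, cy = width / 2, height / 2
    points = []
    for n in range(count):
        r = spacing * math.sqrt(n)      # radius grows with the square root of the index
        theta = n * GOLDEN_ANGLE        # each dot turns one golden angle past the last
        points.append((cx + r * math.cos(theta), cy + r * math.sin(theta)))
    return points
```

Because the radius grows with √n, each dot gets roughly the same area, which gives the evenly packed sunflower look; a larger spacing value simply spreads the same pattern out.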

The random seed state for the wiggling of the dots wasn't captured; it is unknown.

The saved images were strung together into an animation with my script ffmpegAnim.sh, using these positional parameters: 18 fps source, 30 fps target, quality 13, and frame image format png. The script call:

```
ffmpegAnim.sh 18 30 13 png
```
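The internals of ffmpegAnim.sh aren't given here. As a rough, hypothetical illustration of what those four positional parameters could correspond to in a plain ffmpeg call, the Python sketch below builds such a command. It assumes frames named with a five-digit zero-padded pattern (`%05d`), libx264 output, and that "quality 13" maps to x264's `-crf` value; all of those are assumptions, not the script's documented behavior.

```python
import shlex


def ffmpeg_anim_args(source_fps, target_fps, crf, ext, out="out.mp4"):
    """Build a hypothetical ffmpeg command that turns numbered frames into a video.

    -framerate sets how fast the input image sequence is read (source fps);
    -r sets the output frame rate, duplicating frames as needed (target fps).
    Mapping "quality" to -crf and the %05d frame pattern are assumptions.
    """
    return [
        "ffmpeg",
        "-framerate", str(source_fps),
        "-i", f"%05d.{ext}",
        "-r", str(target_fps),
        "-c:v", "libx264",
        "-crf", str(crf),
        "-pix_fmt", "yuv420p",
        out,
    ]


print(shlex.join(ffmpeg_anim_args(18, 30, 13, "png")))
```

Reading the frames at 18 fps and writing at 30 fps keeps the animation's timing while giving players a standard frame rate; lower `-crf` values mean higher quality and larger files.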

This is a still from parameter set 2 of the recently posted Vogel spiral dots Processing language script. The next post may be an animation of this or a link to an animation. It uses the same parameters, canvas size, and ffmpegAnim.sh settings listed above.

See the previous post for a description and details.

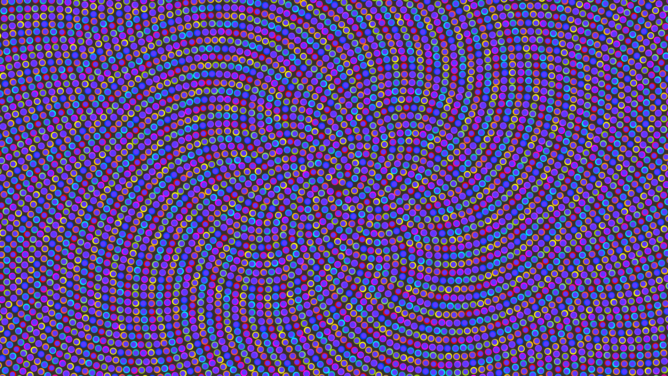

This is the first frame of output from a Processing language script that animates colored dots in a Vogel spiral layout. It uses the dawesometoolkit Processing library. A post of the animated result may appear soon wherever you're seeing this syndicated (if you're lucky); if not, check back soon at the original source of this post.

The Processing source script is at:

https://github.com/earthbound19/_ebDev/blob/master/scripts/processing/vogel_spiral_dots_animated/vogel_spiral_dots_animated.pde

This publication uses v1.0.0 of that script, with parameter set 1, which is hard-coded in it:

```processing
int backgroundDotSize = 20;
int foregroundDotSize = 15;
int vogelPointsDistance = 13;
color[] backgroundDotRNDcolors = {
  // tweaked with less pungent and more pastel orange and green, from _ebPalettes 16_max_chroma_med_light_hues_regular_hue_interval_perceptual.hexplt:
  #f800fc, #ff0596, #ea0000, #fb5537, #ff9710, #ffc900, #feff06, #a0d901,
  #85e670, #0ccab3, #01edfd, #00a6fe, #0041ff, #9937ff, #c830ff
  // omitted because it is used for the foreground dot color: #5c38ff
};
```

The canvas (screen) size is also set to HD video in the setup() function with this call:

```processing
size(1920,1080);
```

The random seed state for the wiggling of the dots wasn't captured; it is unknown.

The saved images were strung together into an animation with my script ffmpegAnim.sh, using these positional parameters: 18 fps source, 30 fps target, quality 13, and frame image format png. The script call:

```
ffmpegAnim.sh 18 30 13 png
```

This is output from an image bomber I coded in Processing, worked up with some impressionist and book-illustration-style presets in Dynamic Auto-Painter Pro (a program that tries to make painterly images from any source image). I then did some layering trickery in Photoshop to blend the styles. The sources for the image bomber were circles in 24 shades of gray, stepped to match human perception of light to dark (white to black), with some random sizing, squishing, stretching, and rotating (which is what the image bomber does).
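The post doesn't say exactly how the 24 gray levels were chosen. One common way to get grays that step evenly to the eye is to space them evenly in CIELAB lightness (L*) and convert back to 8-bit sRGB; the Python sketch below does that. Treat it as an assumption about the method, not the recipe actually used for these sources.

```python
def perceptual_grays(steps):
    """Return `steps` (>= 2) 8-bit sRGB gray levels, white to black, evenly spaced in CIE L*."""
    kappa = 24389 / 27  # CIE constant, about 903.3
    grays = []
    for i in range(steps):
        lightness = 100 * (1 - i / (steps - 1))   # L* from 100 (white) down to 0 (black)
        # CIE L* -> relative luminance Y in 0..1
        if lightness > 8:
            y = ((lightness + 16) / 116) ** 3
        else:
            y = lightness / kappa
        # linear luminance -> gamma-encoded sRGB value in 0..1
        if y <= 0.0031308:
            encoded = 12.92 * y
        else:
            encoded = 1.055 * y ** (1 / 2.4) - 0.055
        grays.append(round(encoded * 255))
    return grays
```

`perceptual_grays(24)` starts at 255 and ends at 0, with the 8-bit values bunched less tightly near white than a naive linear ramp would suggest, because equal L* steps are not equal steps in encoded sRGB.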


The purpose of images like this, for me, besides being cool by themselves, is to use them as transparency (alpha) layers for either effect or image layers in image editing programs. For alphas, white areas are opaque and black areas show through.
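As a minimal illustration of using such an image as an alpha, the Python sketch below blends a foreground layer over a background through a grayscale mask: 255 (white) shows the foreground fully, and 0 (black) lets the background show through. It assumes plain lists of RGB tuples and a simple linear blend; image editors do the equivalent per pixel with layer masks, but this is not their code.

```python
def blend_with_mask(foreground, background, mask):
    """Blend two lists of RGB pixels through a grayscale mask.

    mask value 255 (white) -> foreground fully opaque;
    mask value 0 (black)   -> background shows through;
    values in between mix linearly.
    """
    blended = []
    for fg, bg, m in zip(foreground, background, mask):
        alpha = m / 255
        blended.append(tuple(round(f * alpha + b * (1 - alpha)) for f, b in zip(fg, bg)))
    return blended
```

With a painterly gray image as the mask, the soft brush-like edges become soft transitions between the two layers, which is what makes these images useful as alphas.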

This is my original work and I dedicate it to the Public Domain. Original image size (see the syndication source; if you're already looking at the syndication source, click the thumbnail for the full image): 3200 x 2392 pixels.

[EDIT: re-watching the animation, I think it's too slow. I'll make future animations about 2x faster.]

Four HD animations (in one video) of color growths generated from my Python script color_growth.py (see http://s.earthbound.io/colorGrowth). The last animation stops short of completion because, at this writing, that render is not finished. This post starts with stills of the completed renders, then links to (or includes?) a YouTube upload of the video. If you're seeing this post syndicated, you may have to look at the original post to see the video.


In batch rendering these animations, I found that the renders proceeded far too slowly for my needs (days for one render), even though someone sped up my script a lot: https://github.com/earthbound19/_ebDev/pull/21. Also, the resulting animations had wonky perceptual speed (fast at the start, slowing toward the middle and end), so I updated the script to overcome that.

I overcame that by adding a --RAMP_UP_SAVE_EVERY_N option. With this option enabled, instead of saving an animation frame every N newly painted coordinates, the script ramps up, over time, the number of painted coordinates it waits for before saving a new frame, so that the newly rendered area in each frame resembles dragging a selection rectangle from one corner of the canvas to the opposite corner. This makes the animation perceptually more linear along every growth vector, though technically it's non-linear (it waits longer and longer between saved frames, so it is actually speeding up). The result is that renders happen much faster (as fewer frames are saved), and the animation speed seems constant: it no longer seems to slow toward the middle and end. In fact, as it approaches filling the corners it seems to race toward them, which is a bit funny, and I like it. It still takes a night to render two or three of these, but that's much better than days for one.
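Here is a minimal sketch of one way such a ramp could work, assuming the goal is for the painted area to grow like a rectangle dragged diagonally at constant speed. Such a rectangle's area grows with the square of elapsed time, so frame k is saved once the painted count reaches total × (k / frames)². This quadratic schedule and the function names are illustrative assumptions, not the actual --RAMP_UP_SAVE_EVERY_N implementation in color_growth.py.

```python
def ramped_save_points(total_coords, n_frames):
    """Cumulative painted-coordinate counts at which to save each animation frame.

    Hypothetical quadratic ramp: frame k is saved once total * (k / n_frames) ** 2
    coordinates are painted, so the wait between saves grows every frame.
    """
    points = []
    for k in range(1, n_frames + 1):
        threshold = round(total_coords * (k / n_frames) ** 2)
        if not points or threshold > points[-1]:   # skip duplicate thresholds early on
            points.append(threshold)
    return points


def fixed_save_points(total_coords, every_n):
    """For comparison: save a frame every N painted coordinates."""
    return list(range(every_n, total_coords + 1, every_n))
```

With 100 coordinates and 10 frames, the ramped save points are the perfect squares 1, 4, 9, ..., 100, so the gap between saves grows by a constant amount each frame (1, 3, 5, ...), whereas `fixed_save_points(100, 10)` keeps every gap at 10.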

(I want to try faster Python interpreters / C transpilers, or a wholesale C port, to see if anything can speed it up much more dramatically.)

For HD animations, these have relatively small file sizes (many megabytes, but not hundreds or thousands of megabytes). I believe it's because video compressors exploit parts of an image that stay the same from frame to frame, and in these animations a large area always stays the same: the already-painted area, which keeps growing, and the unpainted area, which keeps diminishing.
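A toy Python model can illustrate that reasoning. Real video codecs use motion-compensated prediction between frames, not the XOR-plus-zlib stand-in below, so this only shows the general principle: when most of each frame is unchanged, encoding the difference from the previous frame is far cheaper than encoding every frame whole. The frame sizes and random painted values are assumptions chosen for the demonstration.

```python
import random
import zlib


def compressed_totals(width=200, height=100, frames=20, seed=1):
    """Toy growth animation: compare compressing whole frames vs. frame deltas.

    Each frame paints the next run of pixels with random values (1-255);
    unpainted pixels stay 0. Returns (sum of zlib sizes of full frames,
    sum of zlib sizes of XOR deltas against the previous frame).
    """
    rng = random.Random(seed)
    canvas = bytearray(width * height)          # one byte per pixel, 0 = unpainted
    previous = bytes(canvas)
    per_frame = width * height // frames
    painted = 0
    full_total = delta_total = 0
    for _ in range(frames):
        for i in range(painted, painted + per_frame):
            canvas[i] = rng.randint(1, 255)     # newly painted pixels
        painted += per_frame
        current = bytes(canvas)
        full_total += len(zlib.compress(current))
        # only newly painted pixels are nonzero in the delta; static pixels XOR to 0
        delta = bytes(a ^ b for a, b in zip(current, previous))
        delta_total += len(zlib.compress(delta))
        previous = current
    return full_total, delta_total


full, delta = compressed_totals()
print(f"full frames: {full} bytes, deltas: {delta} bytes")
```

The full-frame total grows roughly with the square of the frame count (each frame carries all painted pixels so far), while the delta total grows only linearly (each delta carries just that frame's new pixels).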

YouTube video publication of animation: https://youtu.be/9TVgyB-yYqE

This was made via a cellular automaton from a Python script that I wrote and another programmer improved. It simulates imaginary bacteria that leave color-mutating waste as they colonize. I posted about this script in more detail, with other example output, earlier here:

The Python script that generates this, and virtually infinite varieties of works like it, is at: http://s.earthbound.io/colorGrowth

Content of source settings file 2019_10_04__22_46_35__c326e1_colorGrowth-Py.cgp:

```
--WIDTH 1920 --HEIGHT 1080 -a 175 -q 2 --RECLAIM_ORPHANS 1 -n 1 --RSHIFT 5 -b [252,251,201] -c [252,251,201] --BORDER_BLEND True --TILEABLE False --STOP_AT_PERCENT 1 --RANDOM_SEED 2005294276 --GROWTH_CLIP (0,5) --SAVE_PRESET True
```
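To make the "bacteria leaving color-mutating waste" idea concrete, here is a toy Python grid-growth model in the spirit of that cellular automaton: starting from one colored cell, a randomly chosen living cell colonizes a random empty neighbor, and the new cell's color is the parent's color with each RGB channel shifted randomly by up to ±rshift. The `rshift` parameter is modeled loosely on the --RSHIFT setting above, assuming it means a per-channel random shift; everything else is a simplification and not color_growth.py's actual algorithm.

```python
import random


def color_growth(width, height, start_rgb, rshift=5, seed=0):
    """Toy color-growth model; NOT the algorithm of color_growth.py.

    A randomly chosen living cell colonizes one random empty neighbor, and the
    new cell inherits the parent's color with each RGB channel shifted randomly
    by up to +/- rshift (an assumed reading of --RSHIFT), clamped to 0..255.
    """
    rng = random.Random(seed)
    grid = [[None] * width for _ in range(height)]
    x0, y0 = width // 2, height // 2
    grid[y0][x0] = tuple(start_rgb)
    living = [(x0, y0)]                      # cells that may still colonize
    while living:
        i = rng.randrange(len(living))
        x, y = living[i]
        empty = [(nx, ny) for nx, ny in ((x + 1, y), (x - 1, y), (x, y + 1), (x, y - 1))
                 if 0 <= nx < width and 0 <= ny < height and grid[ny][nx] is None]
        if not empty:
            # boxed in: retire this cell (swap with the last entry, then pop)
            living[i] = living[-1]
            living.pop()
            continue
        nx, ny = rng.choice(empty)
        child = tuple(min(255, max(0, c + rng.randint(-rshift, rshift)))
                      for c in grid[y][x])
        grid[ny][nx] = child
        living.append((nx, ny))
    return grid
```

Because each new cell only drifts a little from its parent, colors wander gradually as the colony spreads, which is what produces the smooth, mottled gradients; a larger `rshift` makes the drift noisier. Pure Python like this is far too slow for full HD, so try it on a small grid.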

This work and the video, I dedicate to the Public Domain.

These images and this animation (there may be only one image if you're reading a syndicated post) are ways of representing snapshots and the evolution of source code. The source code behind the second image is version 1.2.0 of a Processing script (program, or code) that produces generative art. At this writing, a museum install of that generative-art program is spamming Twitter to death (via twitter.com/earthbound19bot) every time a user interacts with it (is Twitter overwhelmed?). The generative art is entitled "By Small and Simple Things" (see earthbound.io/blog/by-small-and-simple-things-digital-generative/).

How did I represent the source code of a generative art program as an image? There are ways. A term for creating images from arbitrary data is "data bending": taking data intended for one purpose and using or representing it via other common ways of using data. One form of data bending is text to image; that's what I do here.

But I didn't like the ways to represent code as a "data bent" image that I found when I googled it, so I made my own.

The approach I don't like is to take every three bytes (a byte is 8 zeros or ones) in a source and turn them into RGB values (three values from 0 to 255 for Red, Green, and Blue, the color components of almost all digital images you ever see on any screen). Conceptually that doesn't model anything about the data as an image other than confused randomness, and aesthetically it mostly makes random garish colors (I go into the problems of random RGB colors in this post: earthbound.io/blog/superior-color-sort-with-ciecam02-python/).
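For contrast, the three-bytes-per-pixel approach takes only a few lines. This minimal Python sketch assumes a trailing group of fewer than three bytes is simply dropped; other tools may pad it instead.

```python
def bytes_to_rgb_pixels(data):
    """Naive data bending: every 3 source bytes become one (R, G, B) pixel.

    A trailing group of fewer than 3 bytes is dropped (an assumption).
    """
    usable = len(data) - len(data) % 3
    return [tuple(data[i:i + 3]) for i in range(0, usable, 3)]
```

For ASCII source code, `bytes_to_rgb_pixels(b"ABCDEFG")` gives `[(65, 66, 67), (68, 69, 70)]`: every channel swings independently with the text, which is where the garish, random-looking colors come from.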

A way I like better to model arbitrary data as an image is to map the source data bytes into _one channel_ of RGB, where that one channel fluctuates but the others don't. This has the effect of gauging low and high data points by color intensity, with limited variation. In these data bent images, the green and blue values don't change, but the red ones do: green is zero, blue is full, and the changes in the source data (mapped to red) make the color alternate between blue (all blue, no red) and violet (all blue, all red).

My custom script that maps data to an image creates a PPM image from any source data (PPM, in its plain form, is a text file that describes pixels). The PPM can be converted to other formats by many image tools (including command line tools and Photoshop). This data-to-image script is here: github.com/earthbound19/_ebDev/blob/master/scripts/imgAndVideo/data_bend_2PPMglitchArt00padded.sh
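Here is a minimal Python sketch of the one-channel mapping, written as a plain-text (P3) PPM: each source byte becomes the red value of one pixel, while green stays 0 and blue stays 255. The near-square width and the zero padding of the last row are assumptions for illustration; this is not the code of data_bend_2PPMglitchArt00padded.sh.

```python
import math


def data_to_ppm(data, green=0, blue=255):
    """Map each source byte to the red channel of one pixel in a plain (P3) PPM string."""
    width = max(1, math.ceil(math.sqrt(len(data))))
    height = max(1, math.ceil(len(data) / width))
    # pad with zero bytes so the last row is complete (an assumption of this sketch)
    padded = data + bytes(width * height - len(data))
    lines = ["P3", f"{width} {height}", "255"]
    for row in range(height):
        chunk = padded[row * width:(row + 1) * width]
        lines.append(" ".join(f"{r} {green} {blue}" for r in chunk))
    return "\n".join(lines) + "\n"
```

For example, `open("code.ppm", "w").write(data_to_ppm(open("script.pde", "rb").read()))` would turn a source file into an image that the tools mentioned above can open or convert. Because only red varies, every pixel stays somewhere between blue and violet.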

Again, the first image here (or maybe not, if you're reading a syndicated copy of this post) is from the first version of By Small and Simple Things. The second image is from the latest version (at this writing). The animation is everything in between, assembled via this other script: github.com/earthbound19/_ebArt/blob/master/recipes/mkDataBentAnim.sh

To generate random irregular geometry like in these images (for brainstorming art), 1) install Processing (http://processing.org/download), 2) download this script I wrote for it: https://github.com/earthbound19/_ebDev/blob/master/processing/by_me/rnd_irregular_geometry_gen/rnd_irregular_geometry_gen.pde, then 3) press the "play" (triangle/run) button. It generates and saves PNGs and SVGs as fast as it can make them. Press the square (stop) button to stop the madness. I dedicate this Processing script and all the images I host generated by it to the Public Domain. The first two images here (you may see only one image if you're reading a syndication of this post) are tear sheets or contact sheets (many images per sheet) from v1.9.16 of the script. This search URL brings up galleries of output from this script: http://earthbound.io/q/search.php?search=1&query=rnd_irregular_geometry_gen

You probably can't reasonably copyright immediate output from this script, as anyone else can generate the same thing via the same script if they use the same random seed. But you can copyright modifications you make to the output.
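The point about random seeds can be illustrated with a small Python sketch. It is not the Processing script, whose geometry rules aren't described here; it is a hypothetical generator that draws one irregular polygon from a seeded random number generator. Calling it twice with the same seed yields identical SVG text, which is why raw output can be reproduced by anyone who has the script and the seed.

```python
import math
import random


def irregular_polygon_svg(seed, n_points=7, size=400):
    """Hypothetical random irregular polygon as an SVG string, fully determined by `seed`."""
    rng = random.Random(seed)
    center = size / 2
    sector = 2 * math.pi / n_points
    points = []
    for i in range(n_points):
        # one jittered angle per equal sector: for n_points >= 5 every angular gap
        # stays under 180 degrees, so the outline is star-shaped and does not cross itself
        angle = (i + rng.random()) * sector
        radius = rng.uniform(0.2, 0.48) * size
        x = center + radius * math.cos(angle)
        y = center + radius * math.sin(angle)
        points.append(f"{x:.1f},{y:.1f}")
    fill = f"#{rng.randrange(0x1000000):06x}"
    return (
        f'<svg xmlns="http://www.w3.org/2000/svg" width="{size}" height="{size}">'
        f'<polygon points="{" ".join(points)}" fill="{fill}"/></svg>'
    )
```

`irregular_polygon_svg(42) == irregular_polygon_svg(42)` is always true, while a different seed gives a different shape and color.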

[Syndicated post: if you don't see multiple images in this, open the given archival URL to the original post to see more images. I'm not sure I've figured out how to syndicate gallery posts yet.]

Last night I threw wonky parameters at version 1.6.1 of this work:

https://earthbound.io/blog/by-small-and-simple-things-digital-generative/

At this writing that work is in a museum, and I have since updated it to include shapes other than circles.

These images are the result, and a video of it is pending.