For explanation of the process that made this, see:

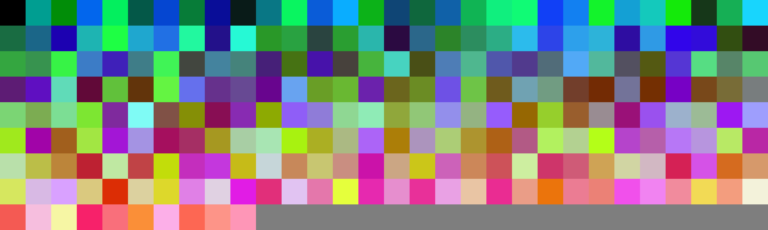

color growth animations 20e406 329bfc e33909 a370a7

[EDIT: re-watching the animation, I think it's too slow. I'll make future animations about 2x faster.]

Four HD animations (in one video) of color growths generated by my Python script color_growth.py (see http://s.earthbound.io/colorGrowth). The last one stops near completion because, at this writing, that render isn't finished. This post starts with stills of the completed renders, then links to (or embeds) a YouTube upload of the video. If you're seeing this post syndicated, you may have to visit the original post to see the video.

YouTube video:

While batch rendering these animations, I found that the renders proceeded far too slowly for my needs (days for one render), even though someone had already sped up my script a lot: https://github.com/earthbound19/_ebDev/pull/21. Also, the resulting animations had wonky perceptual speed (fast at the start, slowing toward the middle and end), so I updated the script to overcome that.

I overcame that by adding a --RAMP_UP_SAVE_EVERY_N option. With this option enabled, instead of saving an animation frame every N newly painted coordinates, the script ramps up, over time, the number of painted coordinates it waits for before saving a new frame, so that the newly rendered area each frame resembles dragging a selection rectangle from one corner of the canvas to the other. This makes the animation perceptually more linear in every growth vector, though technically it's non-linear (it speeds up, waiting for more painted coordinates between each saved frame). The result is that renders finish much faster (as fewer frames are saved), and the animation speed seems constant (it no longer seems to slow toward the middle and end). In fact, as it approaches filling the corners it seems to race toward them, which is a bit funny, and I like it. It still takes a night to render two or three of these, but that's much better than days for one.
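A hypothetical sketch of that kind of schedule (not the actual --RAMP_UP_SAVE_EVERY_N code): if the "selection rectangle" grows linearly from corner to corner over N frames, its area after frame k is proportional to (k/N)², so frame k is saved once total * k² / N² coordinates have been painted, and the gap between saves grows linearly. The function name is made up for illustration.

```python
def ramp_up_save_thresholds(total_coords, n_frames):
    """Painted-coordinate counts at which to save frames 1..n_frames.

    Frame k is saved once ceil(total_coords * k**2 / n_frames**2) coordinates
    are painted, mimicking a rectangle whose sides grow linearly per frame.
    Integer ceiling division avoids floating-point rounding surprises.
    """
    n2 = n_frames * n_frames
    thresholds = []
    for k in range(1, n_frames + 1):
        t = (total_coords * k * k + n2 - 1) // n2
        # On tiny canvases several frames can map to the same count; keep one.
        if not thresholds or t > thresholds[-1]:
            thresholds.append(t)
    return thresholds
```

In a render loop, a frame would be saved whenever the painted count reaches the next threshold. For example, `ramp_up_save_thresholds(100, 10)` gives `[1, 4, 9, ..., 100]`, so the waits between saves are 3, 5, 7, ... coordinates.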

(I want to try faster Python interpreters / C transpilers, or a wholesale C port, to see if anything can speed it up much more dramatically.)

For HD animations, these have relatively small file sizes (many megabytes, but not hundreds or thousands of megabytes). I believe that's because video compressors exploit parts of an image that stay the same from frame to frame, and in these animations that is always true of both the growing painted area _and_ the shrinking unpainted area.
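A toy illustration of that idea, assuming nothing about any particular codec (real ones such as H.264 use motion-compensated prediction, not this): store each frame as its difference from the previous one, and the unchanged pixels become runs of zeros that a general-purpose compressor like zlib shrinks to almost nothing. The frame generator is a hypothetical stand-in for a growth animation.

```python
import random
import zlib

def toy_growth_frames(width, height, n_frames, new_pixels_per_frame, seed=0):
    """Grayscale toy frames: each frame paints a few more random-valued pixels
    and leaves every previously painted (and every unpainted) pixel unchanged."""
    rng = random.Random(seed)
    frame = bytearray(width * height)  # 0 = not yet painted
    order = list(range(width * height))
    rng.shuffle(order)
    frames = []
    for f in range(n_frames):
        for idx in order[f * new_pixels_per_frame:(f + 1) * new_pixels_per_frame]:
            frame[idx] = rng.randrange(1, 256)
        frames.append(bytes(frame))
    return frames

def compressed_sizes(frames):
    """Return (total bytes if each frame is compressed alone,
    total bytes if frames after the first are stored as XOR differences)."""
    independent = sum(len(zlib.compress(f)) for f in frames)
    deltas = [frames[0]] + [
        bytes(a ^ b for a, b in zip(prev, cur)) for prev, cur in zip(frames, frames[1:])
    ]
    delta_coded = sum(len(zlib.compress(d)) for d in deltas)
    return independent, delta_coded
```

Because XOR is its own inverse, each frame can be rebuilt by XOR-ing its difference onto the previous frame. The delta-coded total comes out far smaller, since each difference holds only the newly painted pixels.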

Color Growth 2019-10-04 22-46-35 c326e1

YouTube video publication of animation: https://youtu.be/9TVgyB-yYqE

This was made via cellular automata by a Python script I wrote, which another programmer improved. It simulates imaginary bacteria that leave color-mutating waste as they colonize. I posted about this script, with other example output, in more detail earlier, here:

The Python script which generates this, and virtually infinite varieties of works like it, is at: http://s.earthbound.io/colorGrowth

Content of source settings file 2019_10_04__22_46_35__c326e1_colorGrowth-Py.cgp:

--WIDTH 1920 --HEIGHT 1080 -a 175 -q 2 --RECLAIM_ORPHANS 1 -n 1 --RSHIFT 5 -b [252,251,201] -c [252,251,201] --BORDER_BLEND True --TILEABLE False --STOP_AT_PERCENT 1 --RANDOM_SEED 2005294276 --GROWTH_CLIP (0,5) --SAVE_PRESET True
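The preset is just a line of command-line options. Here is a hypothetical Python sketch of reading such a line back into settings. It is not how color_growth.py parses its options, and it assumes every flag takes exactly one value and that bracketed lists, tuples, numbers and True/False are written as Python literals (as they are in this preset).

```python
import ast
import shlex

def parse_preset(text):
    """Parse a preset line like '--WIDTH 1920 -b [252,251,201] ...' into a dict.

    Values that are Python literals (ints, lists, tuples, True/False) are
    converted; anything else is kept as a plain string.
    """
    tokens = shlex.split(text)  # splits on whitespace; brackets/parens stay in tokens
    settings = {}
    for i in range(0, len(tokens), 2):
        flag = tokens[i]
        if not flag.startswith("-") or i + 1 >= len(tokens):
            raise ValueError(f"expected a flag followed by a value at {flag!r}")
        value = tokens[i + 1]
        try:
            settings[flag.lstrip("-")] = ast.literal_eval(value)
        except (ValueError, SyntaxError):
            settings[flag.lstrip("-")] = value
    return settings
```

With this preset, `parse_preset(...)["GROWTH_CLIP"]` would be the tuple `(0, 5)` and `["b"]` the list `[252, 251, 201]`.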

This work and the video, I dedicate to the Public Domain.

Color growth from virtual bacteria (generative)

What happens if virtual bacteria emit color-mutating waste as they colonize? This 16 megapixel thing happens.

[Later edit: and many other things. I have done many renders from this script, and evolved its functionality over time.]

Inspired by this computer-generated contemporary art post (and after I got the script to work and posted it here), I wondered what the visual result would be from an algorithm like this:

– paint a canvas with a base color

– pick a random coordinate on it

– mutate the color at that coordinate a bit

– randomly walk in any direction

– mutate a bit from the previous color, then drop that color there

– repeat (but don't repeat on already used coordinates)

– if all adjacent coordinates have been colored, pick a new random coordinate on the canvas [later versions of the script, which has evolved over time: OR DIE]

– repeat (this is less necessary if the virtual bacteria colonize) [OR DON'T, as other "living" coordinates will repeat the process]

– [Later script versions: activate orphan coordinates that no bacteria ever reached, and start the process with them.]
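Here is a minimal Python sketch of the steps above, with a single walker. It is not the actual color_growth.py code: the helper names, the mutation size `shift`, 8-way adjacency, and carrying the last color across a jump to a new random coordinate are all assumptions made for illustration.

```python
import random

def mutate(color, rng, shift=8):
    """Return color with each RGB channel nudged by up to +/-shift, clamped to 0..255."""
    return tuple(max(0, min(255, c + rng.randint(-shift, shift))) for c in color)

def neighbors(x, y, width, height):
    """Yield the (up to 8) on-canvas coordinates adjacent to (x, y)."""
    for dy in (-1, 0, 1):
        for dx in (-1, 0, 1):
            nx, ny = x + dx, y + dy
            if (dx or dy) and 0 <= nx < width and 0 <= ny < height:
                yield nx, ny

def random_walk_paint(width, height, base_color, seed=None, shift=8):
    """Single-walker version of the steps; returns canvas[y][x] = (r, g, b)."""
    rng = random.Random(seed)
    canvas = [[base_color] * width for _ in range(height)]  # paint the base color
    visited = set()
    # Every coordinate in random order; used to pick fresh random starting points.
    jump_targets = [(x, y) for y in range(height) for x in range(width)]
    rng.shuffle(jump_targets)
    color, pos = base_color, None
    while len(visited) < width * height:
        free = []
        if pos is not None:
            free = [p for p in neighbors(*pos, width, height) if p not in visited]
        if free:
            pos = rng.choice(free)  # randomly walk to an uncolored neighbor
        else:
            # First step, or every neighbor is colored: jump to a random uncolored coordinate.
            while jump_targets[-1] in visited:
                jump_targets.pop()
            pos = jump_targets.pop()
        color = mutate(color, rng, shift)  # mutate a bit from the previous color...
        x, y = pos
        canvas[y][x] = color  # ...then drop that color there
        visited.add(pos)
    return canvas
```

The shuffled `jump_targets` list always still contains every uncolored coordinate, so picking a new random starting point never needs to rescan the whole canvas.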

Then I wondered what happens if the bacteria duplicate: if they create mutated copies of themselves which repeat the same thing, so that you get spreading colonies of color-pooping bacteria.
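The colonizing version can be sketched like this in Python. Again, this is a hypothetical illustration, not the color_growth.py logic: each generation, every living bacterium drops 1 to `max_children` mutated copies of itself onto uncolored neighbors, a bacterium with no room left dies, and when every colony has died out a new one starts on an orphan coordinate. `colony_paint`, `max_children`, and the generation-by-generation update are assumptions.

```python
import random

def mutate(color, rng, shift=8):
    """Return color with each RGB channel nudged by up to +/-shift, clamped to 0..255."""
    return tuple(max(0, min(255, c + rng.randint(-shift, shift))) for c in color)

def neighbors(x, y, width, height):
    """Yield the (up to 8) on-canvas coordinates adjacent to (x, y)."""
    for dy in (-1, 0, 1):
        for dx in (-1, 0, 1):
            nx, ny = x + dx, y + dy
            if (dx or dy) and 0 <= nx < width and 0 <= ny < height:
                yield nx, ny

def colony_paint(width, height, base_color, seed=None, shift=8, max_children=2):
    """Bacteria spread as mutated copies; returns canvas[y][x] = (r, g, b)."""
    rng = random.Random(seed)
    canvas = [[base_color] * width for _ in range(height)]
    colored = set()
    orphans = [(x, y) for y in range(height) for x in range(width)]
    rng.shuffle(orphans)  # candidate coordinates for new colonies, in random order
    living = []  # (position, color) of bacteria that can still reproduce

    def drop(pos, color):
        canvas[pos[1]][pos[0]] = color
        colored.add(pos)

    while len(colored) < width * height:
        if not living:
            # Every colony died out: activate an orphan coordinate no bacterium reached.
            while orphans[-1] in colored:
                orphans.pop()
            pos = orphans.pop()
            color = mutate(base_color, rng, shift)
            drop(pos, color)
            living.append((pos, color))
            continue
        next_living = []
        for pos, color in living:
            free = [p for p in neighbors(*pos, width, height) if p not in colored]
            rng.shuffle(free)
            n = rng.randint(1, max_children)
            for child in free[:n]:
                child_color = mutate(color, rng, shift)  # a mutated copy of the parent
                drop(child, child_color)
                next_living.append((child, child_color))
            if len(free) > n:
                next_living.append((pos, color))  # parent still has room, so it lives on
        living = next_living
    return canvas
```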

I got a Python script working that accomplished this, and while, with great patience, it produced amazing output, I was frustrated with its inefficiency (a high-resolution render took a day) and wondered how to make it faster.

Someone with the username "scribblemaniac" on GitHub apparently noticed image posts I made linking to this script, figured out how to speed it up by orders of magnitude, and opened a pull request with a new version of the script. (They also added features, and used a new file name. I merged the request.) [Edit: I later merged their script over the original name, and copied my original script to color_growth_v1.py.] The above image is from the new version. It took ~7 minutes to render. The old version would have taken maybe 2 days, roughly 400 times longer. (If the link to the new version goes bad, it's because I tested and integrated or copied the new version over the old file name.)

In a compiled language, it might be much faster.

I did all this unaware that someone else had won a "code golf" challenge by coming up with the same concept, except using colors differently. (There are all kinds of gorgeous generative art results in various answers there! Go have fun and get lost in them!) Their source code is apparently down and abandoned, but someone in the comments describes speeding up the process in several languages and ultimately making a lightning-fast C++ program, the source of which is over here. Breathtaking output examples are over here. Their purpose is slightly different: use every color in the RGB color space. Their source could probably be tweaked to use all colors from a list.

Here are other outputs from the program (which might not show up in syndicated posts; look up the original post URL given).

These are from randomly chosen RGB colors, which, as I went into in another post, tend to produce horrible color combinations. Le sigh. Random picks from CIECAM02 space might be awesome.

I dedicate all the images in this post to the Public Domain.

Superior color sort with CIECAM02 + Python

[UPDATE: there's a lot more to light and color science than this post gets at, and I may get some of it wrong. Also, try color transforms and comparisons in Oklab (comparisons work there too, as Euclidean distance in Oklab approximates perceived color difference).]

It turns out that the digital color models in widest use (such as RGB and HSV) are often bad at judging which colors are "nearest" each other as humans perceive them.

Sometime in my web meanderings, I stumbled on information about the CIECAM02 color model (and space), including a Python library that uses it and a free Photoshop-compatible plugin that manipulates images in that space (it's gee-wow astonishing at what it can do with color). [EDIT 2020-10-07: the link to that plugin is down, and I can't find the plugin on the open web anymore. Here's a link to my own copy of it (in a .zip archive).] If you do color adjustments on images using an application that's compatible with Photoshop plugins (a lot of programs are), go get and install that plugin now! Also: a CIECAM02 color space browser app (alas, it seems to be Windows-only).

I wrote a Python script that uses that library to sort any list of RGB colors (expressed in hex) so that each color sits next to the colors most similar to it. (Figuring out an algorithm that does this broke my brain, I guess in a good way.) (I also wrote a bash script that runs it against every .hexplt file in a directory; .hexplt is a palette file format with one RGB hex color per line.)
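A minimal sketch of one way to do this kind of sort, not necessarily the script's algorithm: a greedy nearest-neighbor chain that starts from the first color and repeatedly appends the closest remaining color. To stay standard-library-only it swaps in Oklab (suggested in the update note at the top of this post) for CIECAM02; the function names are hypothetical.

```python
import math

def srgb_to_linear(c):
    """Undo the sRGB transfer curve for one channel in 0..1."""
    return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4

def hex_to_oklab(hex_color):
    """Convert '#RRGGBB' (or 'RRGGBB') to an Oklab (L, a, b) tuple."""
    h = hex_color.strip().lstrip("#")
    r, g, b = (srgb_to_linear(int(h[i:i + 2], 16) / 255) for i in (0, 2, 4))
    l = 0.4122214708 * r + 0.5363325363 * g + 0.0514459929 * b
    m = 0.2119034982 * r + 0.6806995451 * g + 0.1073969566 * b
    s = 0.0883024619 * r + 0.2817188376 * g + 0.6299787005 * b
    l_, m_, s_ = (v ** (1 / 3) for v in (l, m, s))
    return (
        0.2104542553 * l_ + 0.7936177850 * m_ - 0.0040720468 * s_,
        1.9779984951 * l_ - 2.4285922050 * m_ + 0.4505937099 * s_,
        0.0259040371 * l_ + 0.7827717662 * m_ - 0.8086757660 * s_,
    )

def nearest_neighbor_sort(hex_colors, start=0):
    """Greedy chain: begin at hex_colors[start], then repeatedly append the
    remaining color closest (Euclidean distance in Oklab) to the last one.
    Colors far from everything tend to be left over and land at the end."""
    if not hex_colors:
        return []
    labs = [hex_to_oklab(c) for c in hex_colors]
    remaining = set(range(len(hex_colors))) - {start}
    order = [start]
    while remaining:
        last = labs[order[-1]]
        # Ties break toward the earlier input position, for repeatable output.
        nxt = min(remaining, key=lambda i: (math.dist(last, labs[i]), i))
        remaining.remove(nxt)
        order.append(nxt)
    return [hex_colors[i] for i in order]
```

Assuming a .hexplt file holds one bare hex color per line, `[ln.strip() for ln in open(path) if ln.strip()]` would give the input list. To compare in CIECAM02 instead, `hex_to_oklab` could be replaced by a conversion to a CIECAM02-based uniform space such as CAM02-UCS (available in libraries like colorspacious).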

The results are better than any other color sorting I've found, possibly better than what very perceptive humans could accomplish with complicated arrays of color.

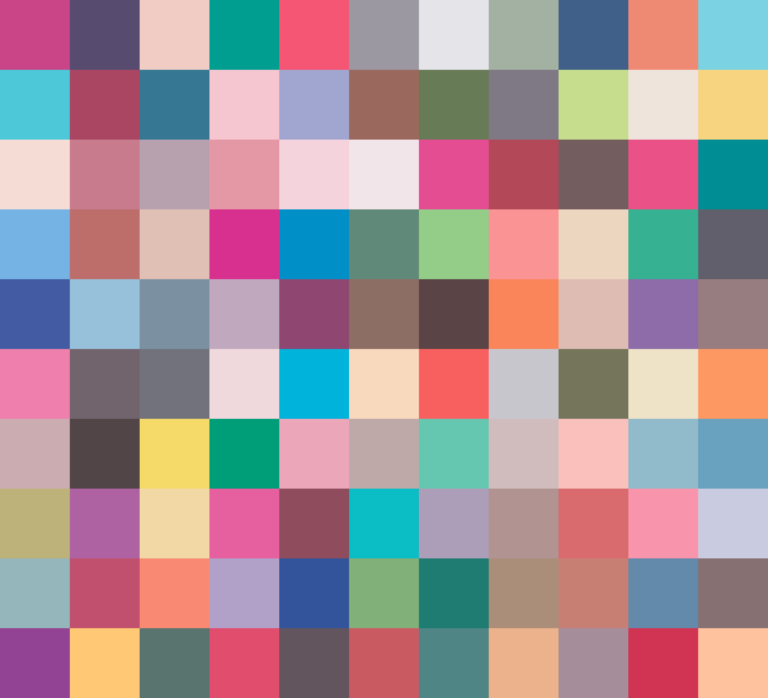

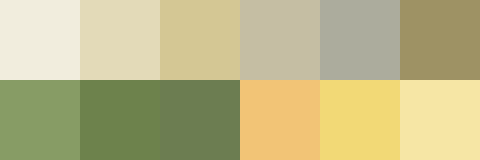

Here's an image of Prismacolor marker colors, in the order that results from sorting by this script (the order is left to right, top to bottom):

For a before/after comparison, here is an image of the same palette, randomly sorted; the script can turn this ordering of the palette into the much more contiguous-appearing one above:

(It's astonishing, but it seems like any color in that palette looks good with any other color in it, even though the palette includes every basic hue, many grays, and some browns. They know what they are doing at Prismacolor. I got this palette from my cousin Daniel Bartholomew, who uses those colors in his art, which you may see over here and here.)

Some other palettes which I updated by sorting them with this script are on display in my GitHub repo of collected color palettes.

Here is another before-and-after comparison, of 250 randomly generated RGB colors sorted by this script. You might correctly guess from this that random color generation in RGB space often produces garish color arrays. I wonder whether random color generation done in a model more aligned with human perception (like CIECAM02) would produce more pleasing results.

See how it has impressive runs of colors very near each other, including by tint or shade, and good compromises when colors aren't near, with the colors that are perceptually furthest from everything at the end. Also notice that darker and lighter shades of the same hue tend to go in separate lighter and darker runs (with colors that interpolate well into those runs in between!) instead of lights and darks sharing the same run, where the larger difference in tint or shade would break the run's continuity.
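One way to try the random-generation idea in a perceptual space is sketched below in Python. It uses Oklab (in its polar lightness/chroma/hue form) rather than CIECAM02 so it needs only the standard library. The lightness range 0.4 to 0.9 and maximum chroma 0.2 are arbitrary assumptions, samples outside the sRGB gamut are simply rejected, and the function names are hypothetical.

```python
import math
import random

def linear_to_srgb(c):
    """Apply the sRGB transfer curve to one linear channel in 0..1."""
    return 12.92 * c if c <= 0.0031308 else 1.055 * c ** (1 / 2.4) - 0.055

def oklab_to_linear_rgb(L, a, b):
    """Convert Oklab (L, a, b) to linear-light sRGB; results may fall outside 0..1."""
    l_ = L + 0.3963377774 * a + 0.2158037573 * b
    m_ = L - 0.1055613458 * a - 0.0638541728 * b
    s_ = L - 0.0894841775 * a - 1.2914855480 * b
    l, m, s = l_ ** 3, m_ ** 3, s_ ** 3
    return (
        4.0767416621 * l - 3.3077115913 * m + 0.2309699292 * s,
        -1.2684380046 * l + 2.6097574011 * m - 0.3413193965 * s,
        -0.0041960863 * l - 0.7034186147 * m + 1.7076147010 * s,
    )

def random_oklch_palette(n, seed=None, lightness=(0.4, 0.9), max_chroma=0.2):
    """Return n '#rrggbb' colors sampled uniformly in Oklab lightness, chroma and hue."""
    rng = random.Random(seed)
    palette = []
    while len(palette) < n:
        L = rng.uniform(*lightness)
        C = rng.uniform(0, max_chroma)
        h = rng.uniform(0, 2 * math.pi)
        rgb = oklab_to_linear_rgb(L, C * math.cos(h), C * math.sin(h))
        if all(0.0 <= c <= 1.0 for c in rgb):  # reject colors outside the sRGB gamut
            palette.append("#" + "".join(f"{round(linear_to_srgb(c) * 255):02x}" for c in rgb))
    return palette
```

Sampling in lightness/chroma/hue keeps every color within a chosen band of perceived lightness and saturation, which RGB sampling cannot do.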

Tangent: in RGB space, I tested a theory that a collection of colors which add (or subtract!) to gray will generally be a pleasing combination of colors, and found this to be often true. I would like to test this theory in the CIECAM02 color space. I'd also like to test the theory that colors randomly generated in the CIECAM02 space will generally be more pleasing alone and together (regardless of whether they were conceived as combining to form gray).
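The "add to gray" test can be made concrete in RGB. One assumed interpretation is that the colors' summed R, G and B channels are equal, so the colors average to a neutral gray. The hypothetical helpers below check that, and compute the darkest single extra color that would complete such a set.

```python
def channel_totals(hex_colors):
    """Sum the R, G and B channels (0..255 each) of '#RRGGBB' colors."""
    totals = [0, 0, 0]
    for h in hex_colors:
        h = h.strip().lstrip("#")
        for i in range(3):
            totals[i] += int(h[2 * i:2 * i + 2], 16)
    return totals

def adds_to_gray(hex_colors, tolerance=0):
    """True if the channel sums differ by at most `tolerance`,
    i.e. the colors average to a neutral gray."""
    totals = channel_totals(hex_colors)
    return max(totals) - min(totals) <= tolerance

def gray_completing_color(hex_colors):
    """Darkest color that, added to the set, makes every channel sum equal;
    returns None if that color would need a channel above 255."""
    totals = channel_totals(hex_colors)
    target = max(totals)  # the smallest equal sum reachable by adding one color
    needed = [target - t for t in totals]
    if max(needed) > 255:
        return None
    return "#" + "".join(f"{v:02x}" for v in needed)
```

For example, `gray_completing_color(["#ff0000", "#00ff00"])` returns `"#0000ff"`, and red, green and blue together pass `adds_to_gray`.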

I really can't have those as the last images in this post. Here is a favorite palette.

Here's the URL to that palette (in my palette repository).

[Edit 2020-10-07: I had renamed or moved several things I linked to from this post, which broke links. I corrected the links after a reader kindly requested to know where things had gone.]