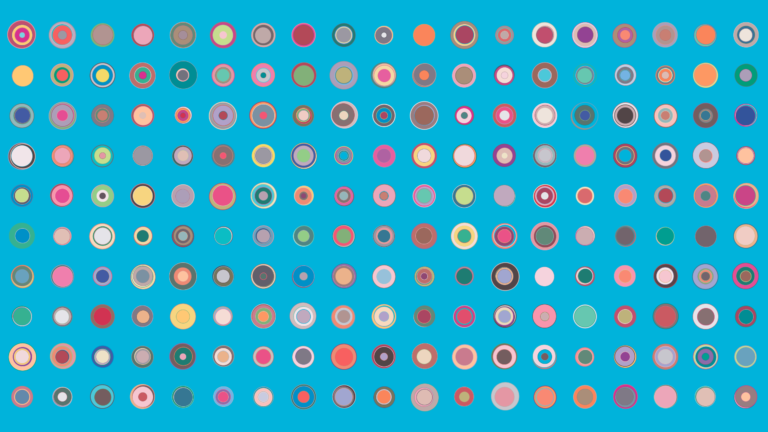

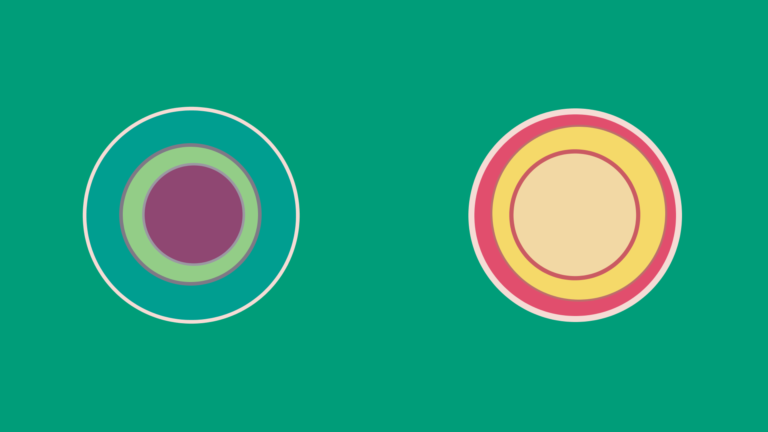

These images come from parameter set 2 of a Vogel spiral dots script written in the Processing language. The next post may be an animation of this, or a link to one. The parameters are:

```processing
int backgroundDotSize = 40;
//int foregroundDotSize = 15;
int vogelPointsDistance = 13;

color[] backgroundDotRNDcolors = {
  #01EDFD, #00FFFF, #00CCCC, #0CCAB3, #00A693, #009B7D,
  #008B8B, #008080, #006D6F, #004C54, #007BA7, #0D98BA,
  #00B7EB, #00A6FE, #3AA8C1, #43B3AE, #3AB09E, #20B2AA,
  #40E0D0, #7DF9FF, #B2FFFF, #E0FFFF, #C0E8D5, #A8C3BC,
  #88D8C0, #7FFFD4, #87D3F8, #40826D, #2E8B57, #00A86B
};
```

The canvas (screen) size was set to HD video resolution in the `setup()` function with this call:

```processing
size(1920, 1080);
```
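The script itself isn't shown here, so this is an illustration only. Vogel's model places point n at angle n × 137.508° (the golden angle) and radius c√n. The sketch below is plain Java, since Processing is built on Java. It assumes, without confirmation from the script, that `vogelPointsDistance` plays the role of the spacing constant c. The hypothetical helper `pointsToFill` estimates how many points the spiral needs to reach the corners of a 1920×1080 canvas: (960² + 540²) / 13² ≈ 7178.7, so indices 0 through 7179, or 7180 points.

```java
import java.util.ArrayList;
import java.util.List;

public class VogelSpiral {
    // Golden angle in radians: pi * (3 - sqrt(5)), about 137.508 degrees
    static final double GOLDEN_ANGLE = Math.PI * (3.0 - Math.sqrt(5.0));

    // Returns n points of a Vogel spiral centred at (cx, cy).
    // ASSUMPTION: 'spacing' corresponds to vogelPointsDistance in the script.
    static List<double[]> vogelPoints(int n, double spacing, double cx, double cy) {
        List<double[]> pts = new ArrayList<>();
        for (int i = 0; i < n; i++) {
            double r = spacing * Math.sqrt(i);   // radius grows with sqrt(index)
            double theta = i * GOLDEN_ANGLE;     // each point turns by the golden angle
            pts.add(new double[] { cx + r * Math.cos(theta), cy + r * Math.sin(theta) });
        }
        return pts;
    }

    // Hypothetical helper: number of points (indices 0..k) needed so the
    // outermost point reaches the canvas corner, i.e. spacing * sqrt(k) >= half-diagonal.
    static int pointsToFill(int width, int height, double spacing) {
        double halfW = width / 2.0;
        double halfH = height / 2.0;
        double halfDiagSq = halfW * halfW + halfH * halfH;
        return (int) Math.ceil(halfDiagSq / (spacing * spacing)) + 1;
    }

    public static void main(String[] args) {
        // Canvas from size(1920, 1080); spacing 13 as in vogelPointsDistance
        System.out.println("points to reach corners: " + pointsToFill(1920, 1080, 13));
        for (double[] p : vogelPoints(5, 13, 1920 / 2.0, 1080 / 2.0)) {
            System.out.printf("%.2f, %.2f%n", p[0], p[1]);
        }
    }
}
```

In the real sketch, each point would presumably get a dot of `backgroundDotSize` filled with a color drawn from `backgroundDotRNDcolors`, but that part is not shown here.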

The random seed that drives the wiggling of the dots wasn't recorded, so it is unknown.
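One way to make a future run reproducible is to choose a seed, log it, and feed it to every random call. In Processing, that would mean calling `randomSeed()` in `setup()`, and also `noiseSeed()` if the wiggle uses `noise()`. The plain Java sketch below shows the same idea with `java.util.Random`. The wiggle amplitude, the `wiggle` and `pickColor` helpers, and the five-color subset of the palette are made-up values for illustration, not the script's.

```java
import java.util.Random;

public class SeededWiggle {
    // Subset of backgroundDotRNDcolors as 0xRRGGBB ints (illustrative only)
    static final int[] PALETTE = { 0x01EDFD, 0x00FFFF, 0x00CCCC, 0x0CCAB3, 0x00A693 };

    // Hypothetical wiggle: offset in [-maxOffset, maxOffset) on each axis
    static double[] wiggle(Random rng, double maxOffset) {
        double dx = (rng.nextDouble() * 2 - 1) * maxOffset;
        double dy = (rng.nextDouble() * 2 - 1) * maxOffset;
        return new double[] { dx, dy };
    }

    // Picks a palette entry using the seeded generator
    static int pickColor(Random rng) {
        return PALETTE[rng.nextInt(PALETTE.length)];
    }

    public static void main(String[] args) {
        long seed = System.currentTimeMillis();
        // Log the seed so this exact run can be recreated later
        System.out.println("seed = " + seed);
        Random rng = new Random(seed);
        for (int i = 0; i < 3; i++) {
            int c = pickColor(rng);
            double[] d = wiggle(rng, 4.0);
            System.out.printf("color #%06X, offset (%.2f, %.2f)%n", c, d[0], d[1]);
        }
    }
}
```

Re-running with `new Random(loggedSeed)` replays the same sequence of colors and offsets, provided the calls happen in the same order.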

The saved frames were strung together into an animation with my script `ffmpegAnim.sh`, using these positional parameters:

source frame rate 18 fps, target frame rate 30 fps, quality 13, and frame image format PNG. The script call:

```sh
ffmpegAnim.sh 18 30 13 png
```
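The contents of `ffmpegAnim.sh` aren't shown, so the mapping below is a guess at what the four positional parameters might become in an ffmpeg call, written as Java that builds the argument list for `ProcessBuilder`. It assumes a `%05d.png` frame-numbering pattern, libx264 encoding, that "quality" is the x264 CRF value (lower means higher quality), and an output name `out.mp4`; all of these are hypothetical. Under this reading, ffmpeg reads the frames at 18 fps and duplicates frames as needed to output 30 fps. As a result, N saved frames last N / 18 seconds, for example 360 frames give 20 seconds.

```java
import java.util.List;

public class FfmpegAnimCommand {
    // Hypothetical reconstruction of: ffmpegAnim.sh <srcFps> <targetFps> <quality> <ext>
    // Input pattern, codec, CRF mapping and output name are ASSUMPTIONS.
    static List<String> buildCommand(int srcFps, int targetFps, int quality, String ext) {
        return List.of(
            "ffmpeg",
            "-framerate", Integer.toString(srcFps),   // rate at which input frames are read
            "-i", "%05d." + ext,                      // assumed frame numbering pattern
            "-r", Integer.toString(targetFps),        // output frame rate (frames duplicated)
            "-c:v", "libx264",
            "-crf", Integer.toString(quality),        // assumed: quality = x264 CRF
            "-pix_fmt", "yuv420p",                    // widely playable pixel format
            "out.mp4");
    }

    public static void main(String[] args) {
        // Mirrors the call: ffmpegAnim.sh 18 30 13 png
        List<String> cmd = buildCommand(18, 30, 13, "png");
        System.out.println(String.join(" ", cmd));
        // To actually run it (requires ffmpeg on PATH):
        // new ProcessBuilder(cmd).inheritIO().start().waitFor();
    }
}
```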